Generative AI has been on a meteoric rise in the last twelve months. Since the launch of the AI-powered language model ChatGPT in November 2022, monthly visits to the site have already reached 1.5 billion. Financial firms have taken notice of this, and AI has quickly become the new frontier for private equity investment. As of Q2 2023, private equity funding in Generative AI startups topped $14.1 billion, $11.6 billion more than in 2022.

Evidently, AI is here to stay. Yet one of the major debates to surface concerns data quality and how it feeds into AI-generated outcomes. This is not a novel concern. Back in 2018, Amazon’s AI recruiting tool showed bias against women. Because it was trained on limited datasets made up of predominantly male resumes, the tool downgraded applications containing the word ‘women’.

Much has already been said about how data quality can influence the results of generative AI. But how can developers ensure that they are using the best training data to create an effective AI model?

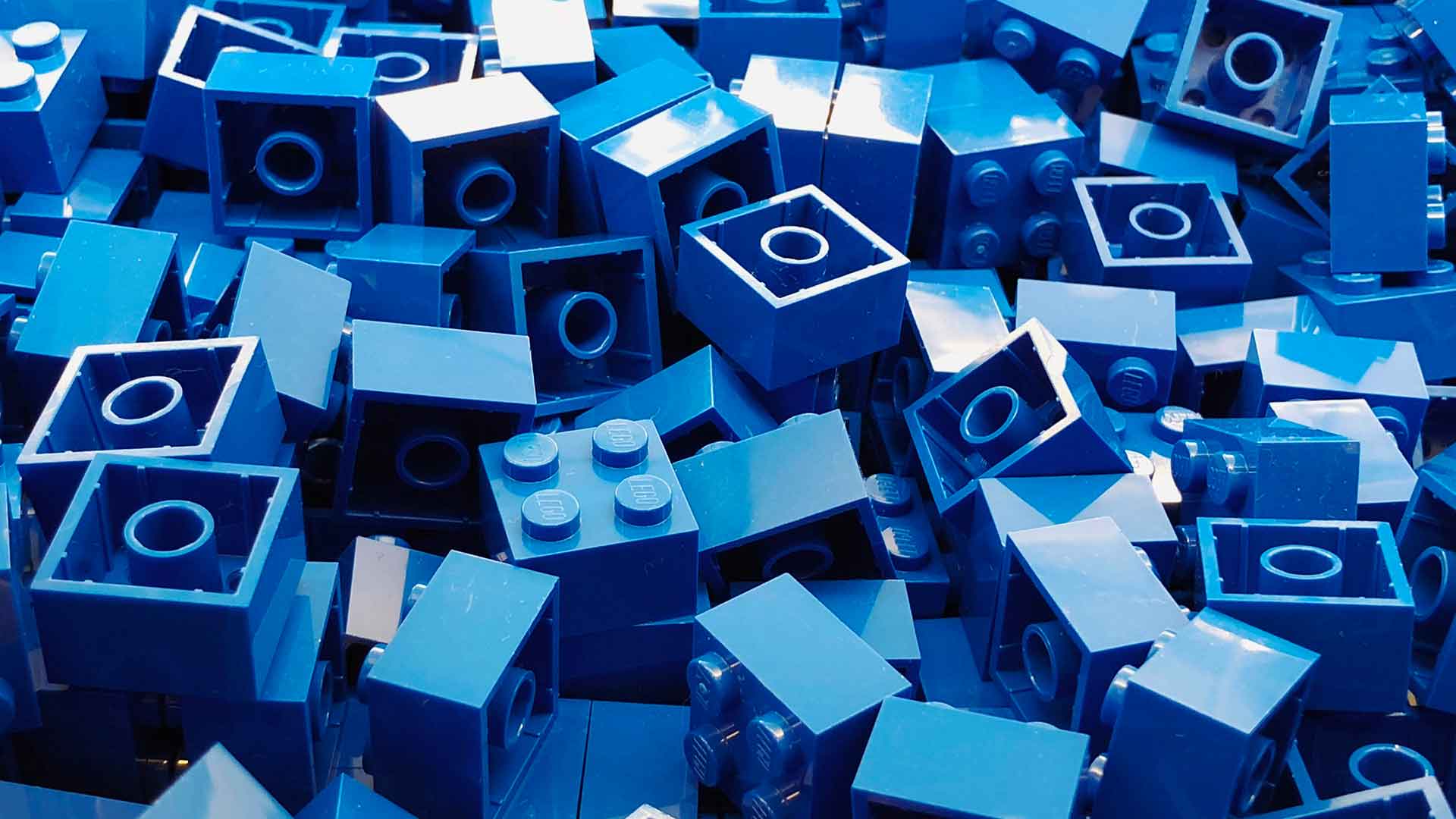

Can you make bricks without good clay?

There’s no denying that AI is transforming many areas of society for the better. Preliminary results for AI use in breast cancer screening show that it can almost halve human workload. However, the biases that could accompany AI tools in recruitment, HR, or policing are a worrying prospect that needs to be addressed.

For the vast majority of firms, the focus needs to be on taking practical steps in the initial stages of AI deployment to help mitigate any discrepancies. AI algorithms dictate the results of these models, enabling them to perform tasks at a faster and more efficient rate.

A recent example from Octopus Energy shows AI’s potential in customer support. AI-automated emails achieved an 80 per cent customer satisfaction rate, 15 per cent higher than skilled, trained people. AI algorithms enable models to learn, analyse data and make decisions based on that knowledge. But real-time AI demands large amounts of data, so it is crucial to source and use data in an ethical manner.

Starting with data from various sources, and ensuring it’s representative of the entire population, will help mitigate the biases and inaccuracies that can arise from a narrow subset of data. Creating diverse data engineering teams for AI and machine learning that reflect the people using and impacted by the algorithm will also help to lessen these biases over time.
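As a rough illustration of what checking representativeness could look like in practice, the sketch below compares each group's share of a training set against a reference population and reports the groups that fall outside a tolerance. The function name `representation_gaps`, the 5 per cent tolerance and the example figures are all assumptions made for illustration, not values taken from any real dataset.

```python
from collections import Counter


def representation_gaps(samples, reference_shares, tolerance=0.05):
    """Return groups whose share of `samples` differs from the reference
    share by more than `tolerance` (hypothetical helper for illustration)."""
    counts = Counter(samples)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            # Positive values mean over-represented, negative under-represented.
            gaps[group] = round(observed - expected, 3)
    return gaps


# Made-up example: a training set skewed 80/20 against a 50/50 population.
training_groups = ["men"] * 80 + ["women"] * 20
population = {"men": 0.5, "women": 0.5}
gaps = representation_gaps(training_groups, population)
print(gaps)  # {'men': 0.3, 'women': -0.3}
```

A check like this only covers attributes that are recorded and chosen for comparison, so it complements, rather than replaces, the diverse teams described above.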

Accounting for biases at the data level can be more difficult, especially for unsupervised learning algorithms. Any flaws in the original data set will manifest in the generated models. At the same time, designing tools to filter out biases can itself reduce the quality of the model. This is where out-of-the-box approaches to rulesets are being developed for better outcomes.

Follow the crowd or break the mould?

Conventional methods of data sourcing, like data labelling, where clear descriptions or markers are added to help categorise large datasets, are based on well-established practices. They can help provide reliable results if the data quality is high. However, poor data will propagate biases, and these established methods can often be difficult to break away from. To that end, within the last year more and more tech companies have been moving away from them, using large language models (LLMs) to jump-start the data analysis process.

LLMs are a type of AI algorithm that uses deep learning to classify and categorise large datasets, bringing greater efficiency and speed to the process. Through their language understanding and generation capabilities, LLMs can detect potential discrepancies in the data that might propagate biased outcomes. Customised LLM platforms are beginning to filter into different sectors, one being cybersecurity. Large tech companies are looking to expand into this space; Google, for example, recently announced its Google Cloud Security AI Workbench.

However, one issue is that LLMs can potentially be manipulated to leak information, especially if misleading information is added to the training data used to fine-tune the model. For users, it can be difficult to verify whether the initial training data has been tampered with, as there is often limited information or transparency regarding the integrity of training data. Despite this, it’s encouraging that these new technologies can aid the identification of potentially incorrect or misleading information.

As these technologies are still in the developmental phase, it would be detrimental to move away completely from more traditional methods like data labelling. LLMs might not fully grasp the complexities of certain data types or contexts, which makes human labelling necessary for improved accuracy.

Will upcoming regulation provide an answer?

With the UK government due to publish a series of tests that need to be met to pass new laws on AI, governments and trading blocs globally have equally been in a rush to implement their own frameworks. While the UK’s more cautious approach contrasts with the EU’s all-encompassing AI Act, it’s clear that regulation, stringent or not, will play a key role in ensuring organisations use high-quality training data. From data source transparency and accountability to encouraging data sharing between organisations, regulation will help to ensure that models are fair.

However, the practicality of AI regulation is proving problematic, as questions of what defines high-risk AI are still being debated. The recent EU AI Act does offer credible solutions to data quality issues, with new legislation focusing primarily on strengthening rules around data quality and accountability. AI providers will need to ensure that AI systems comply with risk requirements, including registered data and training resources and a management system that oversees data quality.

For organisations currently using AI, implementing their own internal set of AI standards that holds people accountable for accurate data labelling, and regularly checks for any errors or inconsistencies, will help to address issues that arise.
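One way an internal standard might check labelling for inconsistencies is to have two people label the same sample and measure how far they agree. The sketch below computes Cohen's kappa, a standard agreement statistic that corrects for chance, and lists the items where the labels differ so they can be reviewed. The function names and the example labels are hypothetical, and this is only one of several possible checks.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Share of items on which the two annotators gave the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected by chance, from each annotator's label frequencies.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


def disagreements(labels_a, labels_b):
    """Indices of items the two annotators labelled differently."""
    return [i for i, (a, b) in enumerate(zip(labels_a, labels_b)) if a != b]


# Made-up labels for four items from two annotators.
annotator_1 = ["spam", "ham", "spam", "ham"]
annotator_2 = ["spam", "ham", "ham", "ham"]
kappa = cohen_kappa(annotator_1, annotator_2)
to_review = disagreements(annotator_1, annotator_2)
print(kappa, to_review)  # 0.5 [2]
```

What counts as an acceptable kappa is a judgment for each organisation to make; the value is most useful when tracked over time and paired with a review of the flagged items.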

By promoting data quality standards and AI Governance, businesses can improve the accuracy and fairness of their AI models, while still reaping the rewards of the burgeoning technology.

For more insights about AI Governance, read the related article: Governance for Generative AI
